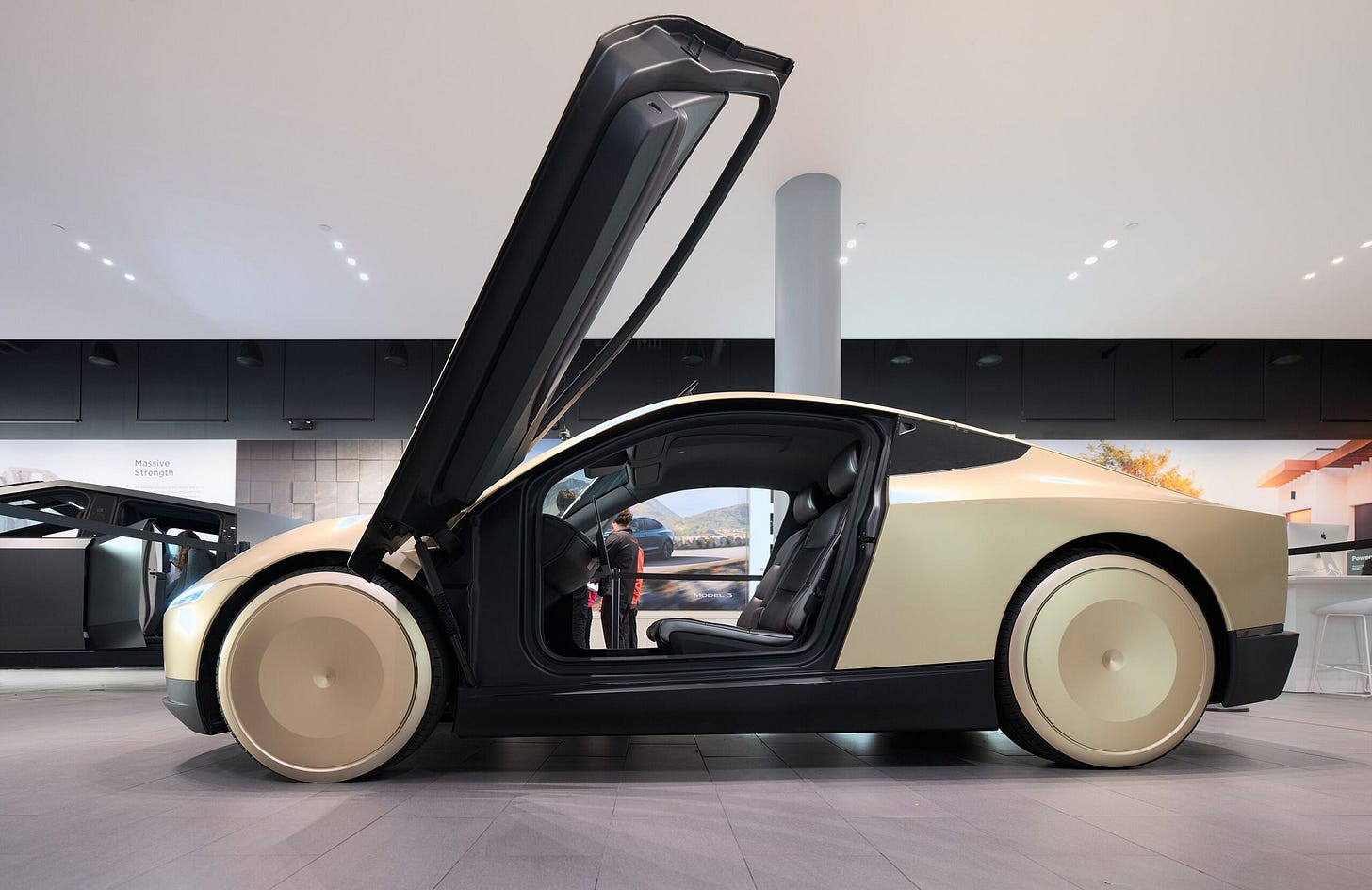

FSD (remote supervised) Tesla Robotaxi

A speculative look at what a Tesla robotaxi deployment in June might look like

My speculative Tesla robotaxi prediction is that we will see a Tesla "FSD (remote supervised)" deployment that is marketed as a robotaxi but regulated as an ordinary vehicle, possibly in June this year. Cybercab would follow using the same deployment model as soon as Tesla can build them at scale.

There has been a ton of speculation about what Tesla plans to put on the roads in June for their robotaxi deployment. Setting aside the possibility that it might be delayed, what will it look like?

Even if the claim of 10,000 miles between critical interventions is accurate, that is very far from good enough for a robotaxi. It seems implausible it will change enough by June to get where it needs to be, even if Tesla is busy training the heck out of an Austin geofenced operating area. It might be Elon’s plan. But it’s not going to happen this year. And, making it a true Level 4 raises regulatory hurdles. So why even go there?
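To see why 10,000 miles between critical interventions falls short, it helps to scale the figure up to a fleet. The sketch below is back-of-the-envelope arithmetic only. The fleet size and daily mileage are hypothetical numbers I picked for illustration, not Tesla figures; only the 10,000-mile value comes from the claim above.

```python
def expected_interventions_per_day(fleet_size, miles_per_vehicle_per_day,
                                   miles_between_interventions):
    """Average number of critical interventions per day across a fleet."""
    fleet_miles_per_day = fleet_size * miles_per_vehicle_per_day
    return fleet_miles_per_day / miles_between_interventions


# ASSUMPTION: 100 vehicles driving 200 miles per day each (illustrative only).
# The 10,000 miles between critical interventions is the claimed figure.
print(expected_interventions_per_day(100, 200, 10_000))  # 2.0
```

Under those assumed numbers, even a small pilot fleet would need a human to prevent a critical event about twice a day, which is why some form of continuous supervision looks hard to avoid.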

Something I have not heard a lot about is the possibility that it might use a remote driver approach. This gives a number of compelling advantages for practical deployment and regulatory evasion. It allows immediate deployment. (And even if they say they are Level 4, I’ll bet this is what is really under the covers.) But it presents some serious safety problems.

If Tesla chooses a remote driver approach, it amounts to “FSD (remote supervised).” FSD runs with a supervising teleoperator. It could look like this:

There will be an operations center with staff who continuously monitor the vehicle remotely, acting as a teleoperation remote driver to intervene when needed. This will be invisible to the passenger, who has a robotaxi-like experience. No driver is in the car.

One driver per vehicle at first, with significant cost pressure to assign multiple vehicles per driver as the technology matures.

No regulatory oversight beyond what applies to any conventional Level 2 ride hail vehicle if they deploy a conventional form factor (Model Y / Model 3).

Tesla will tell states, including the California DMV, that it is unregulated SAE J3016 Level 2 ride hail.

There will indeed be a remote human driver completing the OEDR subtask. SAE J3016:2021 explicitly permits this per Table 3: Level 2 remote user is called a “remote driver”.

PUC will be told it is a human-driven ride hail. Nothing to see here; give us our license. Or just operate on an existing ride hail network until the license is granted.

When it is time to deploy Cybercab, Tesla will self-certify to FMVSS as a remotely supervised Level 2 vehicle, and ask forgiveness from NHTSA much later. This gambit has worked for Zoox thus far, so no reason Tesla can’t try it too. That gives them time to dig up an exemption loophole, drafting behind Zoox, while both of them operate on public roads.

The Tesla marketing narrative will likely be something like this: the remote supervisors are only technically “drivers”; they are there just to make sure things are safe, and disengagements are rare. Over time they can switch from a continuous supervision model to a call center model with the robotaxis calling in for help when they need to. Just like Waymo already does (but without steering wheels). That switch to on-demand remote assistance would in principle correspond to a switch from SAE Level 2 to Level 4 and mean they are “real” robotaxis. Passengers won’t see any difference at all when that happens. So why not call them robotaxis up front?

Robotaxi success can be claimed immediately. After all, the remote drivers are just there because Tesla cares about safety! 🚀🚀 How long it takes to remove the remote drivers is a matter of profitability, but not an existential issue for the company because the robotaxi goal has been unlocked at long last.

It is worth noting that China requires remote drivers or safety supervisors for their robotaxis. So this is not inventing an entirely new model — it is just applying that same idea to Tesla and the US regulatory system. Waymo and other US robotaxi companies use remote assistants who do not have driving controls, although arguably they have driving responsibility. Companies such as vay.io already remote-drive with teleoperated controls on public roads. So there is plenty of precedent for Tesla to say their approach is “proven” or they are being singled out for criticism if regulators start raising questions.

No regulatory approval will be required for such a deployment other than whatever paperwork is required for any ride hail service involving human drivers. No regulations covering autonomous vehicles will apply because these are “Level 2” non-autonomous vehicles to regulators. However, for ordinary folk they are robotaxis that just have a little help when they need it; same as Waymo, but with an indefinite timeframe promise of no geofencing. This amounts to the same public/regulatory arbitrage playbook Tesla has been using for years.

There will be some major safety concerns:

Communication latency & outages will make it difficult for a remote driver to jump in and prevent mishaps when needed. Even just driving is going to be challenging. Lower city driving speeds might help, but the car needs to be able to handle itself safely during a data communications dropout. Many are skeptical this will work, but there are companies who say they already do remote driving, so we’ll see how this works out.

Tesla will likely argue that it is “unlikely” that a comms dropout will happen just when FSD needs supervisor attention, citing a low disengagement rate by the time they deploy. But unlikely circumstances are precisely where safety problems tend to live.
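A rough way to frame the "unlikely" argument is to multiply the rate of needed interventions by the fraction of time the link is down. The sketch below does that under a strong independence assumption, which may well be optimistic since dropouts and tricky driving could cluster in the same places. The fleet mileage and dropout fraction are hypothetical values chosen for illustration; only the 10,000-mile figure comes from the claim discussed earlier.

```python
def expected_uncovered_events(fleet_miles, miles_between_interventions,
                              dropout_fraction):
    """Expected count of needed interventions that land during a comms dropout.

    ASSUMPTION: dropouts are independent of when intervention is needed.
    """
    needed_interventions = fleet_miles / miles_between_interventions
    return needed_interventions * dropout_fraction


# ASSUMPTION: 10 million fleet miles and a link that is down 0.1% of the time.
events = expected_uncovered_events(10_000_000, 10_000, 0.001)
print(f"Expected interventions with no supervisor reachable: {events:.2f}")
```

The point is not the specific number. A small per-event probability multiplied by enough fleet miles stops being negligible, so the car still has to handle a dropout safely on its own.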

If the software only occasionally does really dangerous things, remote drivers will have trouble remaining attentive and situationally aware enough to respond. It will probably be harder to provide acceptable safety supervision remotely than from inside the car because (1) they don’t have literal skin in the game (how immediate does a potential crash feel if you personally won’t be hurt, despite best intentions?) and (2) they are likely in a building somewhere where it is much easier and more tempting to be distracted. It might work, but safety will be hard to pull off, especially as things scale up and a strategy of hiring only the most elite drivers becomes impracticable.

Almost certainly drivers will be blamed for any crash, and will be treated as disposable resources. Have a crash? Fire the driver, and the apparent problem is solved. (Not the real problem, which is remote drivers will struggle to perform well.)

Assigning multiple cars per driver is highly questionable. What if two cars need help at the same time? Will a remote driver really maintain situational awareness for multiple vehicles? There will be economic pressure to do this, and safety will likely degrade if it happens. After some close calls or crashes, probably it will need to be abandoned.
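The "two cars need help at the same time" question can be sketched with a simple binomial model. This is a minimal illustration, assuming each car independently needs help some fixed fraction of the time. Both that fraction and the cars-per-driver values are hypothetical, and the model ignores the separate problem of keeping situational awareness across several vehicles.

```python
def prob_simultaneous_need(cars_per_driver, p_needs_help):
    """Probability that two or more of a driver's cars need help at once.

    ASSUMPTION: each car needs help independently with the same probability.
    """
    n, p = cars_per_driver, p_needs_help
    p_none = (1 - p) ** n
    p_exactly_one = n * p * (1 - p) ** (n - 1)
    return 1 - p_none - p_exactly_one


# ASSUMPTION: each car needs attention 1% of the time (illustrative only).
for cars in (1, 2, 5, 10):
    print(cars, prob_simultaneous_need(cars, 0.01))
```

With one car per driver the overlap probability is zero by construction, and it grows faster than linearly as cars are added, which is where the economic pressure and the safety margin pull in opposite directions.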

When multiple cars per driver doesn’t pan out, perhaps remote supervisor jobs will be farmed out as gig economy spots. Will we see young drivers using a gaming console to remote-supervise or even remote-drive Tesla robotaxis? Serious safety concerns here in multiple dimensions if this happens. But from a company that tells retail customers they can be test drivers, it could happen. Perhaps getting a good Tesla “safety score” unlocks your PlayStation or Xbox as a remote robotaxi driving console.

I am not endorsing this approach. I think we will see serious safety problems. But it does appear to be the most obvious path to deployment given currently available public information.

Of course I could be wrong, and Tesla might do something completely different. I have no insider scoop to support this speculation. This is just a prediction intended purely for entertainment purposes.

For an earlier version of these ideas, see my post from December 2024 about how an unregulated Cybercab might be deployed.

[Many of you might have seen an earlier version of this as a note or LinkedIn post from April 23, 2025. Thanks to the commenters there for their feedback. This is an updated and expanded version that is easier to find in June so we can see how well my crystal ball turned out.]

Phil, thank you for spelling out a "remote-supervised" FSD scenario. Even if the practice of using a remote operator is not new, this opens a whole new can of worms!

Good post, Phil. What's tricky about Tesla and the AV industry in general is that there aren't binary measures of safety. It's all a bit subjective and anecdotal. I have people telling me that Waymo is not safe, yet I have taken dozens of rides, including an 80-minute one the other day, and feel completely safe. Obviously it's not a big sample size, but I don't personally think Tesla is at Waymo's level of safety.

So come June, I won't be sitting in the back of a Tesla robotaxi but I know some/maybe many people will :)