Tesla Deployment Gets Messy. Should we worry?

So much debate and speculation, and so little transparency from Tesla.

Tesla robotaxis are on the road, and it’s starting to get messy. Here is my shot at trying to sort out what we should be worried about, and what is no surprise. This is a supplement to my recent Tesla robotaxi launch post, which you should review if you missed it.

It is not time to worry, quite yet. But it is definitely time to pay attention.

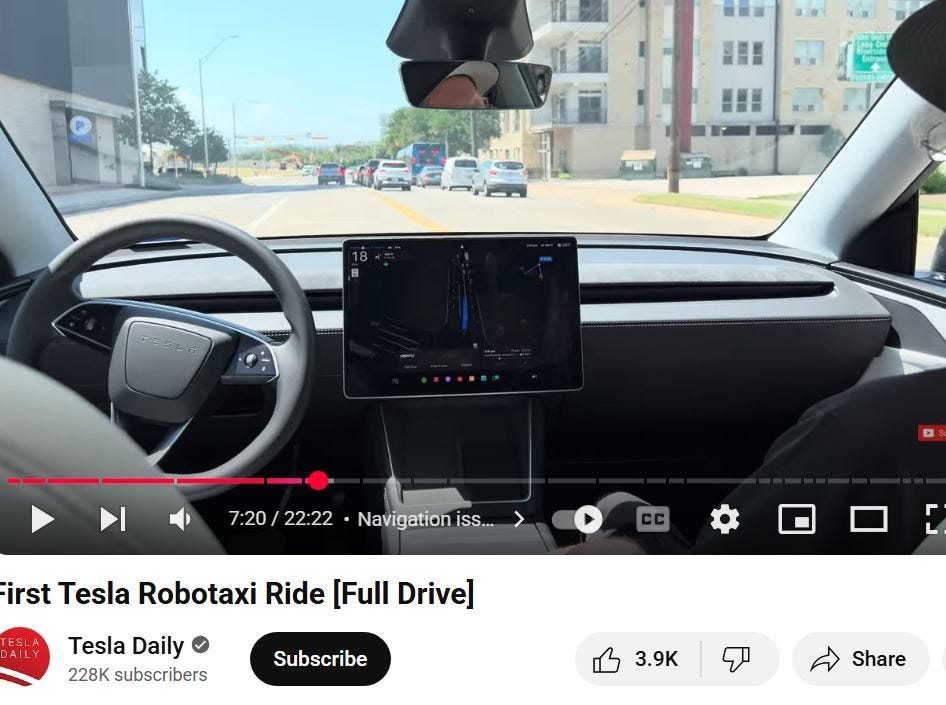

Videos of problematic Tesla robotaxi driving are making the rounds. The ones I find most interesting, so far, are these two:

Bailing out on a left turn and briefly driving in an opposing direction lane to queue for the next left turn. (Source of screen capture above.)

Front-seat manual trigger of in-lane stop to avoid being backed into by a delivery truck.

There are numerous other videos that I feel are less severe, but still inform the emerging conversation. Here’s one list I happened to see.

It is no surprise that we’re seeing videos of erratic robotaxi driving in Austin. After all, it’s still FSD, but with a more complicated supervising driver strategy. We know that FSD can be smooth at some times, not so smooth at others, and downright deadly once in a while if improperly supervised. What has changed is that there is no longer a human in the driver’s seat to blame for failing to prevent a crash. So, for the foreseeable future, safety is going to be about whether there is effective supervision. (Someday FSD might be good enough to not need supervision of any kind. But that is no day soon.)

We have not seen enough to know how this is going to turn out, so this is a bullet point list instead of an essay.

Nobody has been hurt, yet. No substantive loss events, yet. But we are seeing behaviors that we would consider problematic for safety if exhibited by a human driver.

The industry has been promising robotaxis that do not act as bad human drivers act, so that is the metric we should be applying rather than waiting for people to actually be hurt.

Safety for FSD depends significantly on having a human there to intervene when something goes wrong. This has not changed for Robotaxi FSD from what we can already see.

These are all mistakes we see human drivers make. But we were promised that robotaxis would not make human driving mistakes, so it is understandable that these videos are causing concern.

These are comparable to mistakes we saw when Waymo, Cruise, and other robotaxi platforms went on the road. I don’t have time today to dig up the archives, but there are embarrassing video clips for the others from the early (and not-so-early) days, including driving in an opposing traffic lane. So at this point it is difficult to say Tesla is worse. Certainly they are under a microscope in a way that Cruise and Waymo never were (and that is saying something!).

Transparency is a bit worse for Tesla, but the rest of the industry has not exactly shared all their bad news in an unbiased way. That doesn’t make opacity right, but it is important perspective. Additionally, Texas requires essentially no transparency and essentially no regulatory oversight until there is a crash — for all companies, not just Tesla. (At least until September 1st when a new Texas law makes things somewhat better.)

Nobody has been hurt. Yet. The ultimate safety outcome will depend on Tesla having the prudence and restraint to limit how aggressively they scale up, so that loose ends get cleaned up faster than new ones are created. Cruise got this spectacularly wrong. To date Waymo has, on net, come out OK on this. Time will tell how Tesla does. It is reasonable based on history to expect Tesla will have an above-average risk appetite.

We still don’t know for sure what is going on with remote steering wheels. At this point there should be someone remotely monitoring every car, continuously, every second it is moving, and ready to jump in with a driver-quality steering wheel, brake, and accelerator pedal. Remote monitoring is technically difficult, especially due to control lag, but also due to limitations of situational awareness. But to not be doing FSD (remote supervised) 100% of the time at this stage is, in my opinion, courting disaster. We simply don’t know what’s going on here, and Tesla has told NHTSA its responses to those types of questions are proprietary.

The dude riding shotgun (so far all the videos I’ve seen are a dude) has limited ability to ensure safety.

For the UPS truck he evidently stopped in time to avoid a crash, regardless of blame. But why did the remote driver (if any) not intervene first?

For the left turn it looks like maybe a remote driver took over mid-turn (or not?) so the dude in front did not need to. But it still ran the wrong way in a lane, which is asking for trouble and should have been prevented by any remote driver involved.

The dude in front has a difficult-to-use braking interface which will not ensure safety in all cases. Stabbing a soft button in a swerving car in a crisis is a suboptimal safety interface. Moreover, if FSD takes an unsafe left turn with oncoming traffic, hitting the brakes ensures a collision. Grabbing the steering wheel across the front seats won’t help much either in some cases. It’s simply not an adequate arrangement to be a credible safety driver, which is why I have previously called that person a chaperone. (There are important, legit reasons for them, such as moving a stuck car. But they should not be mistaken for a safety driver.)

Folks can criticize FSD driving quality, but we should be completely unsurprised at what we are seeing. It’s still FSD, and still a very long way from being able to operate without supervision.

The elephant in the room for safety remains what the supervision strategy for FSD is. Given that FSD is making driving mistakes often enough to be on video this many times in a couple of days, getting that wrong will almost certainly mean an injury crash or worse sooner rather than later. Let’s hope Tesla is getting it right.

My takeaway is that these video clips mean we should pay attention, and NHTSA has already said it is doing that. That’s the right answer.

It is too soon to reach a verdict. But clearly the situation is messy. The onus is on Tesla to get its house in order before it has a serious crash. With only a dozen or so vehicles on the road, there is no room in the risk budget for a serious crash this early and at this small a deployment scale.

Reuters has an article that takes a look at various incidents that have been reported with Tesla robotaxi driving behavior in Austin:

https://www.reuters.com/business/autos-transportation/teslas-robotaxi-peppered-with-driving-mistakes-texas-tests-2025-06-25/

I have never heard this: "But we were promised that robotaxis would not make human driving mistakes." Please tell me who said this? I have honestly never heard it claimed by the self-driving car guys. What I heard was that they would make fewer human driving mistakes, and that overall they would be safer. I find this complaint to be making up a lie to make a fallacious case. Sadly this kind of 'exaggeration' just sillifies the laws. Do we make it illegal to make any mistake a human might make? (Drunk or a rat shorting a wire?) In any event, the second measure is measurable: "Overall safer."

Does Robotaxi work like Uber in terms of getting a ride? With Uber you have to say where you get picked up and where you are going. I would assume, like Uber, sometimes you will be disappointed with no ride because no driver will take the offer. I spent a lot of time on Ubers (or Lyfts) and learned where and why to have a friend drive me. (Never try to cross the river in Boston city proper to Cambridge or vice versa.) Teslas were popular, and a good FSD guy (or girl) was pretty amazing at how they could anticipate where the automation was likely to make mistakes. I am certain these systems must have a way to risk-evaluate routes. I would love to be able to order a robotaxi if it can find a safe(r) route. I know when I am driving, I often take the longer, less stressy route just to avoid the possibility of something bad happening (like in Atlanta, DC, or Southern California). I can't wait to have a voice Grok for my robotaxi so I can ask questions and give advice. (Hi Phil.)